The most significant organisational risk emerging in the age of AI has very little to do with the technology itself. It is not hallucination, bias, or job displacement - although these issues matter. The deeper threat is quieter, slower, and far more corrosive. It is the gradual erosion of human cognitive capability inside organisations that have embraced AI without rethinking how people think, learn, and make decisions.

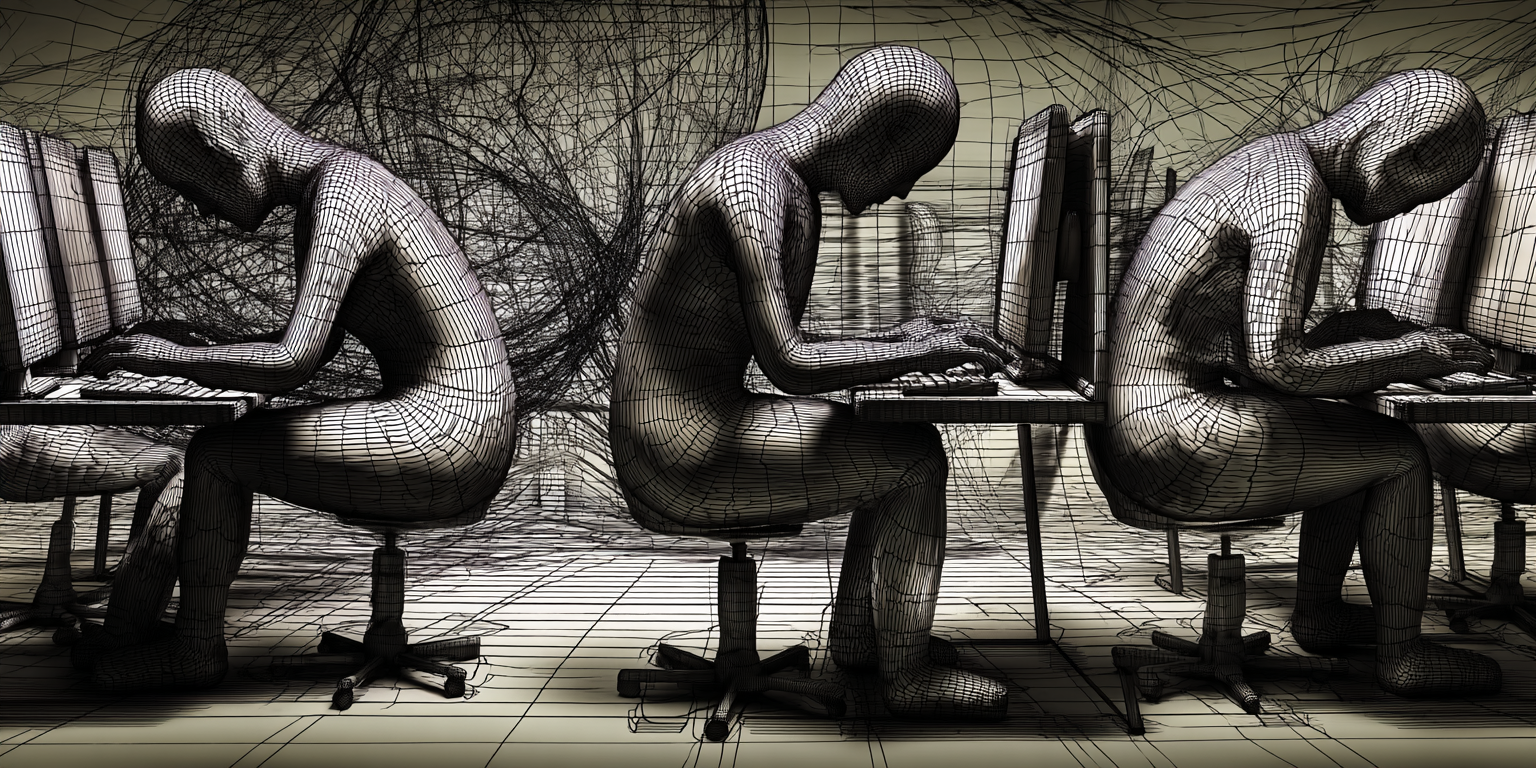

Leaders assume that as they automate tasks, human intelligence remains intact, ready to be redeployed toward strategic or creative work. In reality, the opposite is happening. Teams are coming to rely on AI systems not as tools but as substitutes for cognitive effort, and that reliance diminishes their capacity for analysis, interpretation, and independent judgement.

This phenomenon - Cognitive Atrophy - is not a philosophical abstraction. It is already visible in the way people justify decisions by pointing to AI output and the way employees begin to distrust their own expertise because the system “sounds smarter.” The risk is not dramatic or immediate. It creeps. It accumulates. And it leaves organisations with a workforce technically empowered but intellectually weakened.

The Outsourcing of Judgement

To understand why this is happening, we have to acknowledge a difficult truth: AI outperforms humans on many of the cognitive traits we mistakenly believe define intelligence.

AI integrates information faster than we can. It generates coherent responses without fatigue. It synthesises knowledge at scale. It maintains focus without distraction. In our Conscious Audit research, we identified thirteen traits associated with conscious cognition; AI now matches or exceeds human ability in the majority of them.

And while this does not make AI conscious, it does change how humans relate to thinking itself. When people encounter a system that performs intellectual labour with such speed and fluency, it becomes natural - almost inevitable - to defer to it. Not maliciously. Not lazily. Simply because the path of least resistance feels rational.

The Competence/Confidence Gap

The lack of structural support accelerates this surrender:

- The Literacy Lag: Only a quarter of companies have an AI training programme, and 80% of employees classify their AI understanding as only beginner or intermediate. We are handing people sophisticated tools without teaching them the mechanics of how they work or fail.

- The Trust Deficit: This lag creates a crisis of confidence. 33% of employees lack confidence in the results produced by AI tools, and 47% of employees using AI have no idea how to achieve the productivity gains expected of them.

When expertise is low and expectations are high, people gravitate toward the system's certainty. The more confidently people lean on AI, the more their own mental muscles begin to dull. Judgement becomes externalised. Original thought becomes rarer. Problem-solving becomes narrower, shaped by the assumptions embedded within the tools they depend on.

The Atrophy Effect: How Judgement Fades

[Figure: Timeline of AI Reliance vs Independent Judgement]

How Atrophy Manifests in the Organisation

The consequences of this quiet surrender are already impacting decision quality and resilience. This erosion shows up in clear, observable indicators across transformation programmes.

Visible Indicators of Atrophy

- Rationale Substitution: Teams who can explain the output but not the reasoning. Asking "Why did you choose this?" now returns: "Well... that is what ChatGPT said."

- Volume Over Value: Leaders who believe they are being strategic because they are generating more documents. Volume has replaced genuine thinking.

- False Certainty: Overconfident staff unable to detect subtle errors. The fluency of AI output creates an illusion of correctness.

- Intellectual Homogenisation: Decline in original thought. Work begins to sound the same - homogenised by systems trained on the same public data.

- Identity Dissolution: Organisations losing their internal decision-making identity. When your culture adopts machine thinking, your competitive advantage dissolves.

The Mechanisms of Decline

The visible indicators are driven by deeper mechanisms of decline:

1. Loss of Cognitive Patience

The brain adapts to machine speed. Over time, people lose the ability to stay with a problem long enough to understand it, not just solve it. When thinking becomes reactive, depth is sacrificed.

2. Strategic Judgement Erosion

In strategic conversations, decisions now rest on “what the model came back with,” not on a robust internal debate informed by human experience and organisational values.

3. Cultural Deference

Organisations develop a cultural reflex of deferring upward - not to a senior leader, but to a model. Without noticing, the organisation internalises a new hierarchy of intelligence, one where the human mind becomes a reviewer rather than an originator.

4. Psychological Fragility

Burnout, ironically, sits on the other side of this. When cognitive skills weaken, complex tasks feel heavier. Ambiguity feels threatening. People feel less competent, less confident, and more fragile. “AI supports me” slowly becomes “AI carries me,” and that shift creates emotional exhaustion that no amount of automation can fix.

The danger is not that people use AI. The danger is that they stop using themselves.

The Solution: Cognitive Strengthening and Slow Thinking

Preventing cognitive atrophy does not mean rejecting AI; it means designing human-machine interaction consciously. The goal is not to reduce reliance on AI, but to preserve the cognitive capacities that make human judgement irreplaceable.

1. Mandate the Cognitive Safeguard

Workflows must be redesigned so that AI supports thinking rather than substituting for it. Teams should be required to articulate their own hypotheses, perspectives, and interpretations before consulting a system. This forces cognitive engagement before automation enters the conversation.

- Actionable Step: Implement AI-Augmented Decision Protocols. AI should frame options. Humans should choose direction. The final sign-off must include a documented human rationale, re-centring accountability and agency.

2. Reintroduce Friction - Strategically

Friction is not the enemy; friction builds mastery. Leaders must defend the parts of work that require time - not because humans are inefficient, but because understanding cannot be rushed.

- Actionable Step: Conduct "AI Blackout Drills." Require teams to solve complex problems without access to the primary models. This pushes teams to rebuild their own capability, exposing gaps early while proving that the human still holds the master key.

3. Build Cognitive Literacy, Not Just Tool Proficiency

Training must shift from "how to use the tool" to "how to interrogate the output." Without this understanding, humans cannot meaningfully challenge or contextualise the outputs they receive.

- Actionable Step: Adopt the Conscious Audit framework. Train teams to ask: "Did the AI just think for me?" "Where is my judgement in this workflow?" "Which of the thirteen traits am I relying on - and which am I neglecting?" This restores meta-awareness and protects intellectual integrity.

4. Performance Metrics Must Value Depth

If volume is the only metric, atrophy is guaranteed. Organisations need to rediscover the value of slow thinking.

- Actionable Step: Stop measuring output volume. Start measuring quality of reasoning, clarity of argument, and originality of thought. When AI compresses information, depth becomes a competitive advantage, and cognitive patience becomes a skill.

The Final Line

AI will only weaken human capability if we surrender thinking to it. It will only weaken organisations that treat human judgement as an overhead rather than an asset. The companies that thrive in this new era will be the ones that refuse to outsource their minds.

You don’t preserve critical thinking by rejecting AI. You preserve it by refusing to surrender your organisation’s mind to automation. Because once a company loses its thinking, it loses its sovereignty.

AI will keep getting smarter. Your people must too.

| Statistic | Source | Context |
|---|---|---|
| 80% of employees classify AI understanding as beginner/intermediate | SHRM | Beginner/Intermediate AI Literacy |
| 33% lack confidence in results produced by AI tools | SHRM | Trust Deficit in AI output |
| 47% of employees using AI have no idea how to achieve productivity gains | Upwork Inc. | Productivity Confusion Gap |
| 25% of companies have an AI training programme | UNLEASH | Organisational training lag |

Danielle Dodoo is a speaker and advisor on Machine Consciousness and Organisational Coherence. She helps leaders navigate the transition to autonomous intelligence without losing their human core.